Easily

Open-source low-code tool for developers to build customized LLM orchestration flows and AI agents

Backed by Y Combinator

Trusted and used by teams around the globe

Iterate, fast

Developing LLM apps takes countless iterations. With a low-code approach, we enable quick iterations so you can go from testing to production

- $ npm install -g flowise

- $ npx flowise start

Features 01

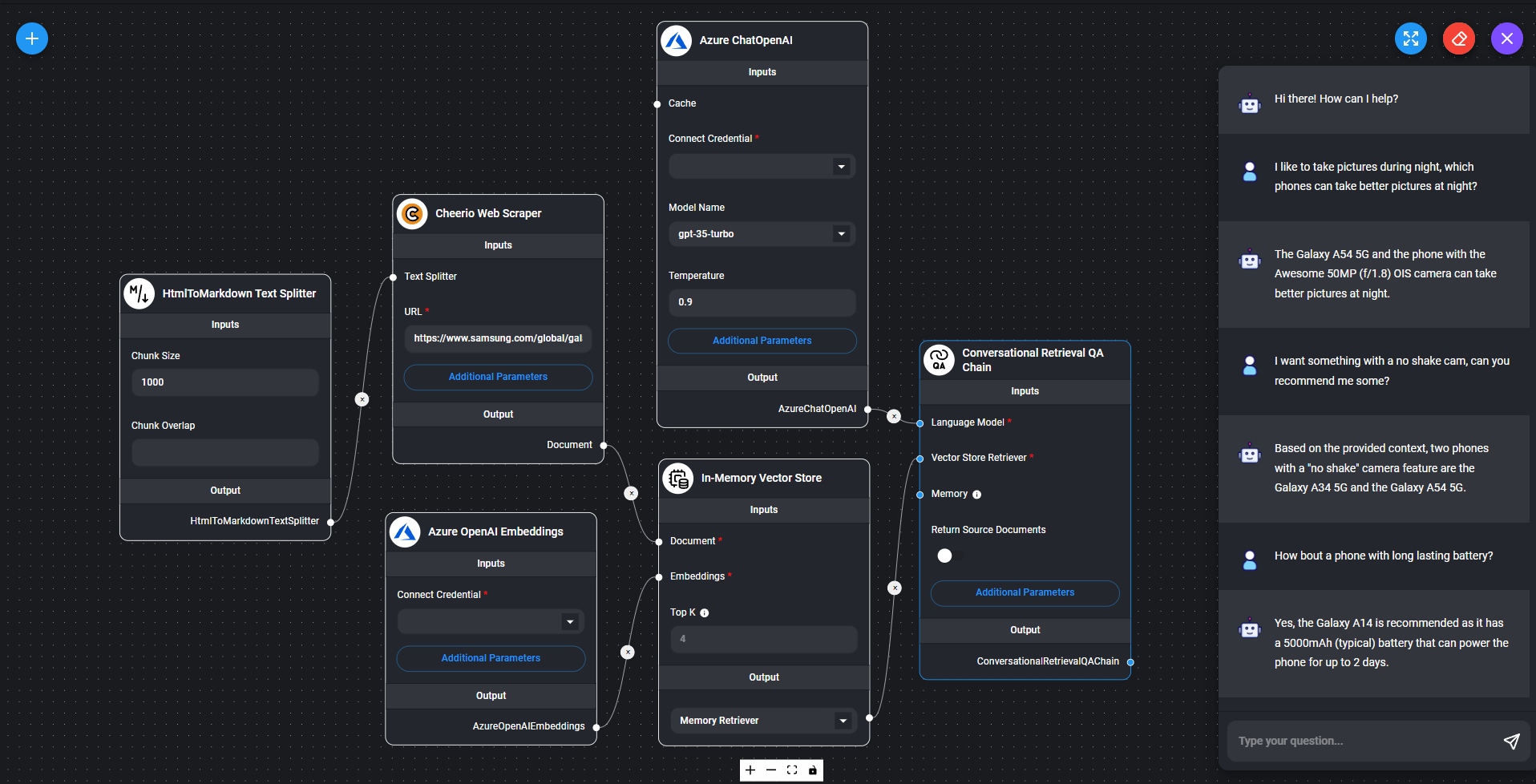

Chatflow

LLM Orchestration

Connect LLMs with memory, data loaders, cache, moderation, and more

- LangChain

- LlamaIndex

- 100+ integrations

Agents

Agents & Assistants

Create autonomous agents that can use tools to execute different tasks

- Custom Tools

- OpenAI Assistant

- Function Agent

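    import requests

    # Assumption: a local Flowise server on http://localhost:3000.
    # Replace :id with your chatflow ID.
    url = "http://localhost:3000/api/v1/prediction/:id"

    def query(payload):
        # POST the payload as JSON and return the parsed reply
        response = requests.post(url, json=payload)
        return response.json()

    output = query({"question": "hello!"})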

Developer Friendly

API, SDK, Embed

Extend and integrate with your applications using APIs, SDKs, and embedded chat

- APIs

- Embedded Widget

- React SDK
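As a rough illustration of the API route, here is a minimal standard-library sketch that prepares and sends a question to the `/api/v1/prediction/:id` endpoint. The server address `http://localhost:3000` and the helper names `build_prediction_url`, `build_request`, and `ask` are assumptions made for this example, not part of Flowise itself; adjust them to your deployment.

```python
import json
from urllib import request

# Assumption: a Flowise server started locally with `npx flowise start`
# and reachable at this address; change it to match your deployment.
BASE_URL = "http://localhost:3000"


def build_prediction_url(base_url, chatflow_id):
    """Join the server address and chatflow ID into a prediction endpoint URL."""
    return f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"


def build_request(chatflow_id, question, base_url=BASE_URL):
    """Prepare (but do not send) a JSON POST request carrying the question."""
    body = json.dumps({"question": question}).encode("utf-8")
    return request.Request(
        build_prediction_url(base_url, chatflow_id),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(chatflow_id, question, base_url=BASE_URL, timeout=30):
    """Send the question and return the decoded JSON reply."""
    req = build_request(chatflow_id, question, base_url)
    with request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a server running, a call would look like `ask("<your-chatflow-id>", "hello!")`. Keeping request construction separate from sending makes it easy to inspect the URL and payload before anything goes over the network.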

Features 02

Platform Agnostic

Open source LLMs

Run in an air-gapped environment with local LLMs, embeddings, and vector databases

- HuggingFace, Ollama, LocalAI, Replicate

- Llama2, Mistral, Vicuna, Orca, Llava